It is best practice to create clusters with at least three nodes to improve reliability and efficiency.
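One common reason for the three-node minimum assumes the cluster coordinates through a majority quorum, as Raft-based stores such as etcd do. Under that assumption, a cluster of n nodes needs n // 2 + 1 members to agree, so it can lose n - (n // 2 + 1) nodes and keep working. The minimal sketch below just tabulates that arithmetic. The function names are illustrative, not part of any library.

```python
def majority_quorum(n: int) -> int:
    """Smallest number of nodes that forms a strict majority of n."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """How many nodes can fail while a majority quorum can still be formed."""
    return n - majority_quorum(n)


for n in range(1, 6):
    print(f"{n} node(s): quorum={majority_quorum(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")
```

Two nodes tolerate no more failures than one, which is why three is the smallest majority-quorum cluster that survives losing a node.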

A typical cluster has one master node, which acts as the unified endpoint for the cluster, and at least two worker nodes.
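As a rough illustration of the master/worker split, here is a minimal in-process sketch. The `Master` and `Worker` classes are hypothetical: clients call only the master, which delegates each task to whichever worker currently holds the fewest tasks. Real cluster managers add networking, health checks, and richer scheduling policies that this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    name: str
    tasks: list[str] = field(default_factory=list)

    def run(self, task: str) -> str:
        # Record the task so the master can see this worker's load.
        self.tasks.append(task)
        return f"{task} done by {self.name}"


class Master:
    """Single entry point: clients talk to the master, never to workers directly."""

    def __init__(self, workers: list[Worker]) -> None:
        if len(workers) < 2:
            raise ValueError("this sketch expects at least two worker nodes")
        self.workers = workers

    def submit(self, task: str) -> str:
        # Delegate to the least-loaded worker (ties go to the first in the list).
        worker = min(self.workers, key=lambda w: len(w.tasks))
        return worker.run(task)


master = Master([Worker("worker-1"), Worker("worker-2")])
for job in ["job-a", "job-b", "job-c"]:
    print(master.submit(job))
```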

Below, we look at some key points about nodes and clusters. Let’s begin.

Nodes and clusters are two of the most important concepts in the field of computer networking and systems design. In this article, we will explore these two concepts in detail, discussing what they are, how they are used, and how they can benefit organizations and individuals.

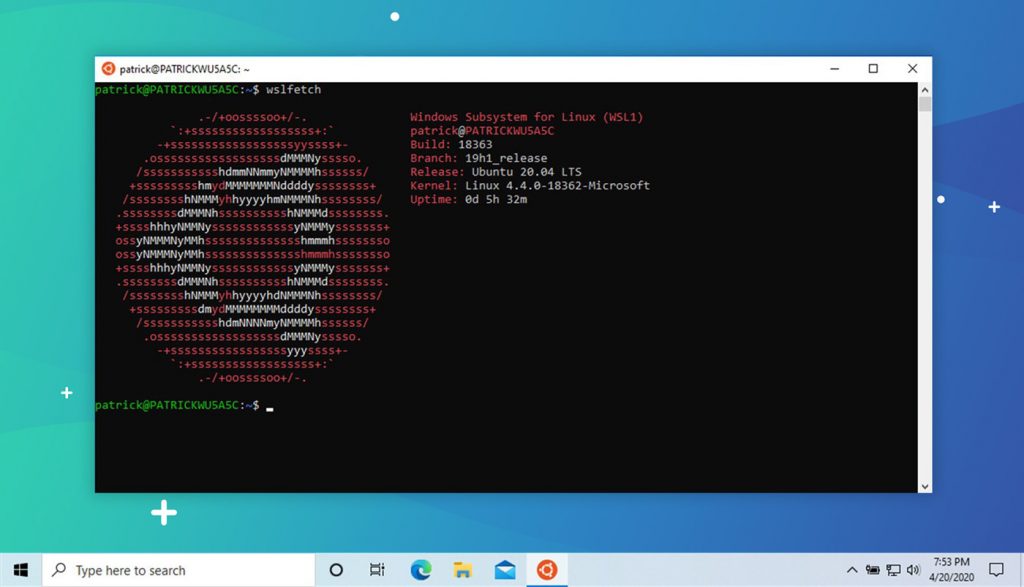

First, let’s start with nodes. Nodes are individual components in a computer network that are connected to one another. They are typically physical devices, such as servers, workstations, or other types of computer systems. Nodes can also refer to software components that exist within a larger system, such as a web server, database server, or other type of software application.

Nodes play a critical role in computer networks because they allow information to be transmitted and processed across multiple devices. Spreading work over several nodes distributes the demand for processing power and memory, which can make networks more efficient and scalable. Multiple nodes can also reduce downtime and improve overall network reliability. For example, if one node fails, the other nodes in the network can continue to operate normally, so critical services and data remain available as long as the remaining nodes can take over the failed node's work.
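The failover idea in the paragraph above can be sketched in a few lines. This is a simplified model, assuming each node is a function that either answers a request or raises a hypothetical `NodeDown` error; the node names and helpers are illustrative only.

```python
from typing import Callable

Node = Callable[[str], str]


class NodeDown(Exception):
    """Raised by a node that is unavailable."""


def make_node(name: str, up: bool) -> Node:
    def handle(request: str) -> str:
        if not up:
            raise NodeDown(name)
        return f"{name} served {request}"
    return handle


def serve_with_failover(nodes: list[Node], request: str) -> str:
    """Try each node in turn; the first healthy one answers."""
    for node in nodes:
        try:
            return node(request)
        except NodeDown:
            continue  # this node failed, so fall through to the next one
    raise RuntimeError("all nodes are down")


nodes = [make_node("node-1", up=False),
         make_node("node-2", up=True),
         make_node("node-3", up=True)]
print(serve_with_failover(nodes, "GET /status"))
```

Even though `node-1` is down, the request is still answered by `node-2`.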

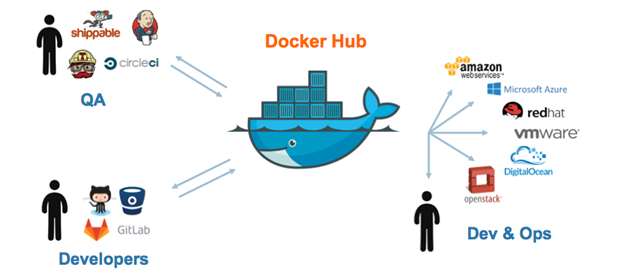

Next, let’s discuss clusters. A cluster is a group of interconnected nodes that work together to perform a specific task or set of tasks. Clusters are often used to achieve high levels of performance, reliability, and scalability. Clusters can be used in many different contexts, including high-performance computing, database management, and web services.

One of the key benefits of clusters is that organizations can increase their computing power by adding nodes. This is particularly useful for organizations that process large amounts of data, because they can scale their computing resources dynamically to meet changing demand. Clusters can also improve reliability: services and data can be spread across multiple nodes and automatically shifted to the remaining healthy nodes when one fails. This reduces downtime and keeps critical services available even if one or more nodes fail.
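One way to decide which node holds which piece of data, and to move only what is necessary when a node fails, is rendezvous (highest-random-weight) hashing. It is one technique among several (consistent-hashing rings are another), and the article does not say which one any particular system uses. In this sketch the node names and keys are hypothetical.

```python
import hashlib


def score(node: str, key: str) -> int:
    """Deterministic pseudo-random weight for a (node, key) pair."""
    digest = hashlib.sha256(f"{node}:{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def owner(key: str, nodes: list[str]) -> str:
    """Rendezvous hashing: the highest-scoring node owns the key."""
    return max(nodes, key=lambda node: score(node, key))


keys = [f"user-{i}" for i in range(1000)]
before = {k: owner(k, ["node-a", "node-b", "node-c"]) for k in keys}
after = {k: owner(k, ["node-a", "node-c"]) for k in keys}  # node-b has failed

moved = [k for k in keys if before[k] != after[k]]
# Only keys that node-b owned change owner; every other key stays put.
print(f"{len(moved)} of {len(keys)} keys moved, all from node-b:",
      all(before[k] == "node-b" for k in moved))
```

The same property holds when scaling out: adding a node only takes over the keys for which the new node now scores highest, so existing nodes do not reshuffle data among themselves.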

There are several different types of clusters that can be used for different purposes, including load-balanced clusters, high-availability clusters, and compute clusters. Load-balanced clusters are used to distribute processing loads across multiple nodes, helping to improve network performance and scalability. High-availability clusters are used to ensure that critical services remain available even if one or more nodes fail, while compute clusters are used for scientific and engineering applications that require large amounts of computing power.
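For load-balanced clusters, one of the simplest distribution policies is round robin, where each request goes to the next node in a fixed rotation. Real load balancers also offer other policies, such as weighted or least-connections routing. The `RoundRobinBalancer` class and node names below are hypothetical and only illustrate the idea.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands each incoming request to the next node in a fixed rotation."""

    def __init__(self, nodes: list[str]) -> None:
        self._rotation = cycle(nodes)

    def route(self, request: str) -> str:
        # Round robin ignores the request's content; only arrival order matters.
        return next(self._rotation)


balancer = RoundRobinBalancer(["node-1", "node-2", "node-3"])
load = Counter(balancer.route(f"req-{i}") for i in range(9))
print(load)  # each node receives 3 of the 9 requests
```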

In conclusion, nodes and clusters are critical concepts in computer networking and systems design that play important roles in ensuring network efficiency, scalability, and reliability. Whether you are an individual user or a large organization, understanding nodes and clusters is essential for maximizing your computing resources and ensuring that your data and services are available when you need them. With their ability to improve performance, reduce downtime, and increase scalability, nodes and clusters will continue to play important roles in the world of computing for many years to come.

REF

https://www.onixnet.com/insights/nodes-vs-clusters?hs_amp=true
